RWTH

Johanna Werz

Johannes Zysk

Type: service-human/knowledge

TRL: 4-6

To ensure AI systems are reliable and trustworthy, they must be tailored to human needs rather than forcing humans to adapt to technology. Users trust AI systems when they function effectively and operate correctly, which requires transparency regarding performance and underlying mechanisms. This aligns with the European Commission’s requirements for trustworthy AI: human agency, technical robustness, privacy, fairness, and transparency. The aim in the FAIRWork project was therefore to analyze transparency from users’ perspectives and help developers implement it in their services. The results of our research show that transparency measures can foster trust in AI for (non-technical) end users if applied correctly. Global process explanations, local reasoning about single results, background information, or accuracy communication can change the way an (AI) decision support system is perceived. Our research in FAIRWork on transparent AI and trust identified different system factors that change trust, acceptance, and usage of AI systems, both positively and negatively. Depending on these factors, different options for implementing transparency in AI arise. This innovation item provides insights into which measures promote this trust and how they can be applied to develop or improve AI systems.

IPR / Licence

IP: Authorship

Contact Person

Information

AI systems must be reliable and trustworthy, especially when following democratic approaches. To maintain human autonomy and meet user requirements, systems must be tailored to human needs rather than forcing adaptation to technology. Respecting the end user’s perspective is crucial for developing such systems, a key aspect of the Industry 5.0 concept.

Trust in AI hinges on two questions: Is the system functioning effectively? And is it operating correctly? Addressing these concerns requires transparency in performance and underlying mechanisms. While technological strides have been made in interpretability and explainability, many solutions remain incomprehensible to lay users.

To address these issues, we conducted studies on AI transparency focusing on lay users’ perceptions. A qualitative focus group study was conducted to understand users’ requirements regarding AI transparency. The results are presented below in the section Evaluating AI Transparency through Qualitative Focus Groups.

Furthermore, an additional study compared different types of transparency and their impact on user trust. For more details, see the section Comparing AI Transparency Methods through Quantitative Experiments. Results from both studies led to the creation of a transparency matrix showing how subjective system factors affect the need for various transparency measures to foster trust. This matrix enhances technical developments by ensuring they are implemented with a user-centered approach and can be used by developers beyond our project to ensure transparent AI services. More on that matrix can be found in the Applying Transparency from a Lay User Perspective: Matrix and Guidelines section.

Working with technical partners ensures our findings on transparency are implemented across different AI services. We developed a table comparing aspects of transparency measures tailored to various FAIRWork services based on their use cases, types of AI used, and implementation methods. The results can serve as best practices for setting up AI transparency in different services. More on the specific application of the transparency results to AI service development is described in the section: Applying Transparency to Concrete Services.

Our research findings can improve AI design and implementation in workplace settings beyond our project scope. The following sections introduce our work and address the question of what developers can do to improve AI transparency.

Evaluating AI Transparency through Qualitative Focus Groups

To identify user requirements for AI transparency, a focus group study focused on lay users’ needs beyond technical aspects and examined how different system factors influence these requirements. Participants discussed three fictitious AI applications: a financial investment app, a mushroom identification app, and a music selection app, each representing distinct system factors. A pre-test ensured the apps were perceived according to their assumed system factors. The sessions involved 26 individuals, including 15 women.

In three rounds, participants addressed questions like what the AI app needs to explain, under which conditions they would use the app, and how they would react when they realized that the app was wrong.

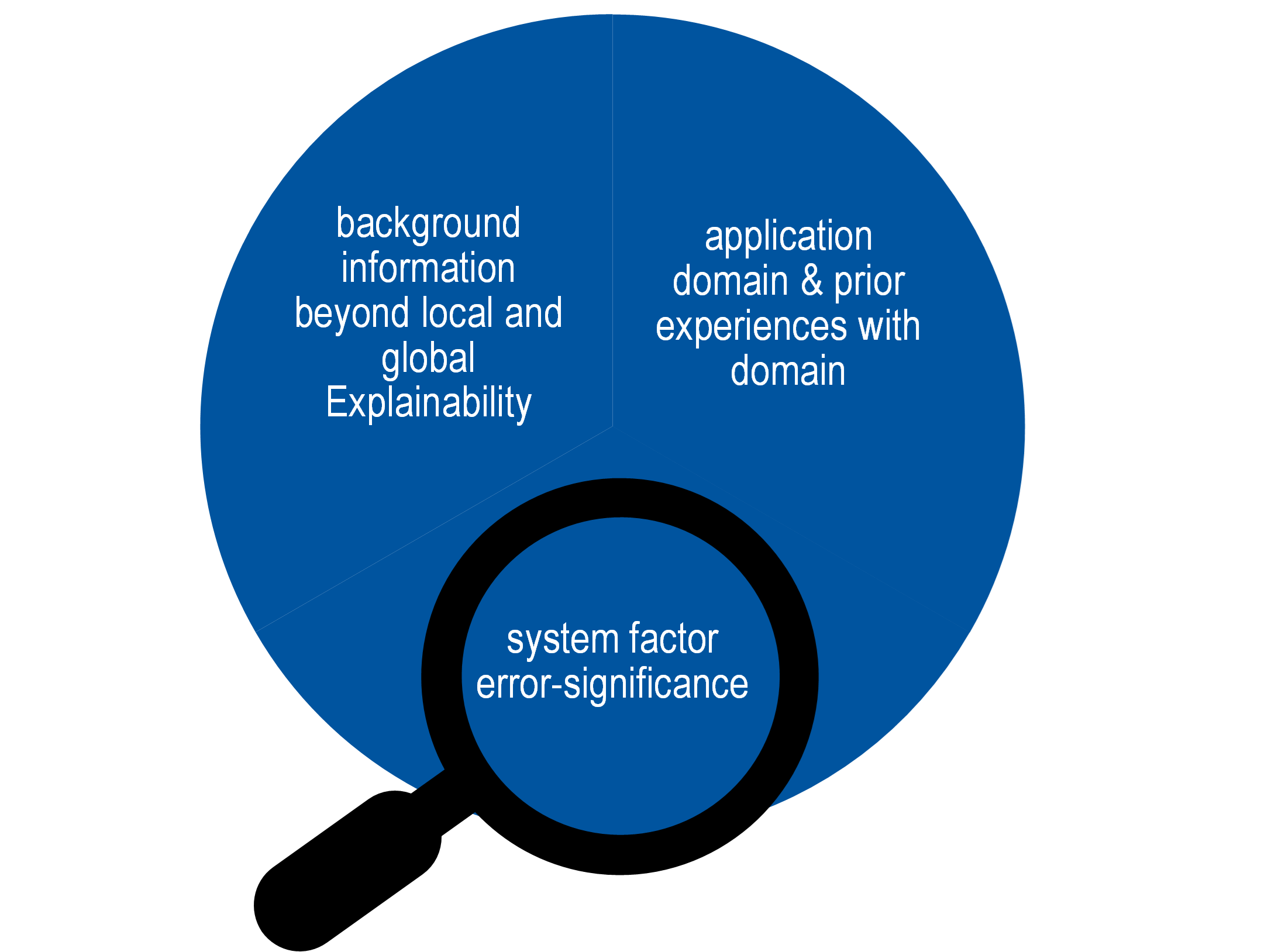

Three key pillars of transparency requirements emerged:

- the domain and prior experiences with systems,

- background information beyond local and global explainability,

- and the significance of system errors (see figure below).

Participants’ previous experiences greatly influenced their attitudes and needs for transparency. Skepticism was linked to negative previous experiences, be it with similar apps or with the domain. Familiarity increased the participants’ willingness to test an AI app. Prior interaction with similar systems shaped expectations for transparency in new ones. Transparency concerns often focused on specific aspects rather than entire systems. Security measures, business model information, and data privacy emerged for single app subsystems. Lay users blurred boundaries between global and local transparency, extending concerns beyond explainability.

Error significance notably affected transparency demands; higher perceived error risks led to calls for more background information. Novelty also played a role, e.g., new ways of interaction or mood identification features. User involvement in design processes is therefore crucial for identifying sensitive aspects and tailoring transparency measures.

Overall, transparency requirements varied by AI type, application domain, and users’ past experiences with domains or systems. Transparency demands are dynamic, evolving with system changes and user experiences. Error significance intensified these concerns across all applications, highlighting the need for transparent AI systems accountable to users’ needs.

More Information

More information on our innovation item can be found in Chapter 3.8 of our D3.3, specifically:

- Chapter 3.8.2: Qualitative Focus Groups about AI Transparency

- Chapter 3.8.4: Matrix and Guidelines on the Application of Transparency from a Lay User Perspective

- Chapter 3.8.6: Practical Application of Transparency in Different DAI-DSS Services

Or you can have a look at our paper:

- Werz, J. M., Borowski, E., & Isenhardt, I. (2024). Explainability as a Means for Transparency? Lay Users’ Requirements Towards Transparent AI. Cognitive Computing and Internet of Things, 124(124). https://doi.org/10.54941/ahfe1004712

Comparing AI Transparency Methods through Quantitative Experiments

Transparency can have paradoxical effects, sometimes even reducing trust depending on context, implementation, and target audience. While there is no universal definition of transparency, two main types can be distinguished: local explanations (specific results) and global explanations (overall system functionality). Local explanations focus on why a single result occurred, while global ones explain the system as a whole. The effects of different transparency types on user attitudes remain inconclusive. Additionally, various technological implementations of transparency have yet to be systematically compared regarding their impact on trust and usage.

To investigate this, a quantitative experiment was conducted with 151 participants to assess how different transparency types affect trust and usage. Four transparency conditions were tested (global functionality, global accuracy, local accuracy, and local functionality) and compared with a non-transparent condition. Participants could use AI advice when estimating weights from pictures and provided feedback on their trust in the algorithms. The users evaluated all transparency types. Results indicated that transparency significantly influences both trust and algorithm usage. Trust varied significantly among the four examined types of transparency; to a lesser extent, the usage of the algorithms varied as well. Algorithms with transparency measures were used and trusted more than the non-transparent algorithm. Global transparency measures that comprised background information, such as the author of the AI and testing processes, proved most effective in building trust.
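As an illustration of how advice usage can be quantified in an estimation task like this one, the following minimal sketch computes the "weight of advice", a measure commonly used in advice-taking research. This is an assumption for illustration only: the text above does not state which usage measure the experiment applied, and the function name and example values are hypothetical.

```python
from typing import Optional


def weight_of_advice(initial: float, advice: float, final: float) -> Optional[float]:
    """Share of the distance towards the advice that the participant moved.

    0.0 means the advice was ignored, 1.0 means it was fully adopted.
    Returns None when the advice equals the initial estimate, because the
    measure is undefined in that case.
    """
    if advice == initial:
        return None
    return (final - initial) / (advice - initial)


# Hypothetical trial: first estimate 60 kg, algorithm suggests 80 kg,
# participant settles on 70 kg, i.e. moves halfway towards the advice.
example = weight_of_advice(initial=60.0, advice=80.0, final=70.0)
```

Averaging such a value per transparency condition would give one comparable usage score per condition.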

Overall, the findings suggest that while all forms of transparency enhance trust levels differently, users particularly value general background information about algorithms’ developers and testing processes for establishing initial trust. Local explanations also play an essential role during algorithm use but may not be as effective in fostering initial trust as global measures.

More Information

More information about this can be found in Chapter 3.8 of our D3.3, specifically:

- Chapter 3.8.3: Quantitative Experiment Comparing AI Transparency Methods.

A specific paradigm has been developed to test the usage of AI under differing conditions. The method has been described in two publications:

- Werz, J. M., Borowski, E., & Isenhardt, I. (2020). When imprecision improves advice: Disclosing algorithmic error probability to increase advice taking from algorithms. In C. Stephanidis & M. Antona (Eds.), HCI International 2020—Posters (pp. 504–511). Springer International Publishing. https://doi.org/10.1007/978-3-030-50726-8_66

- Werz, J. M., Zähl, K., Borowski, E., & Isenhardt, I. (2021). Preventing discrepancies between indicated algorithmic certainty and actual performance: An experimental solution. In C. Stephanidis, M. Antona, & S. Ntoa (Eds.), HCI International 2021—Posters (Vol. 1420, pp. 573–580). Springer International Publishing. https://doi.org/10.1007/978-3-030-78642-7_77

Applying Transparency from a Lay User Perspective: Matrix and Guidelines

The matrix and guidelines for AI experts and developers combine the study results from FAIRWork and answer two questions: Which aspects of transparency are central for the user group of non-AI-experts? How do transparency requirements depend on system factors?

Based on an AI system’s given system factors, the matrix user can derive 13 implications for AI transparency. These implications show which user needs emerge regarding AI transparency. The transparency implications comprise, for instance, control over decisions, global and/or local explanations, accuracy evaluations, or insights into data processing practices.

The recommended approach for applying the transparency matrix consists of four steps:

- Decomposing the AI into its process steps for detailed analysis: Especially for complex AI systems, it is beneficial to decompose them into individual process steps before conducting an analysis. For example, one might identify steps such as (a) data input and (b) suggestions.

- Identifying which subjective system factors apply to the specific system: It is important to note that the system factors are subjective and can be perceived differently by users. For instance, certain individuals may perceive the input of specific data as sensitive, while others have no such concerns.

- Deriving transparency requirements based on these characteristics.

- Evaluating outcomes to verify correct identification of these properties and ensure that transparency measures enhance understanding and support usage.
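The first three steps above can be sketched as a simple lookup. Note that this is only an illustrative sketch: the factor names and the factor-to-measure mapping below are hypothetical placeholders, not the actual transparency matrix, which is not reproduced here. The fourth step (evaluation with users) is not something code can replace and is therefore left out.

```python
from dataclasses import dataclass, field

# HYPOTHETICAL placeholder mapping from subjective system factors to
# transparency measures. The real matrix may differ in both factors and
# implications.
FACTOR_TO_MEASURES = {
    "sensitive_data": ["insights into data processing"],
    "high_error_significance": ["background information", "accuracy communication"],
    "novel_interaction": ["global explanation"],
}


@dataclass
class ProcessStep:
    """One process step of the AI system (step 1: decomposition)."""
    name: str
    # Step 2: factors that users perceive as applying to this step.
    factors: set[str] = field(default_factory=set)


def derive_requirements(steps: list[ProcessStep]) -> dict[str, list[str]]:
    """Step 3: collect the transparency measures implied by each step's factors."""
    requirements: dict[str, list[str]] = {}
    for step in steps:
        measures: list[str] = []
        for factor in sorted(step.factors):  # sorted for a stable order
            for measure in FACTOR_TO_MEASURES.get(factor, []):
                if measure not in measures:
                    measures.append(measure)
        requirements[step.name] = measures
    return requirements


steps = [
    ProcessStep("data input", {"sensitive_data"}),
    ProcessStep("suggestions", {"high_error_significance", "novel_interaction"}),
]
requirements = derive_requirements(steps)
```

Because the system factors are subjective, the `factors` assigned to each step would in practice come from user research rather than from the developer's own assumptions.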

More Information

More information about this can be found in Chapter 3.8 of our D3.3, specifically:

- Chapter 3.8.4: Matrix and Guidelines on the Application of Transparency from a Lay User Perspective. (The matrix is currently being finalized and will be scientifically published in 2025.)

Applying Transparency to Concrete Services

Transparency is an essential prerequisite for trust in an AI system and can be applied differently. The DAI-DSS in FAIRWork combines many different services, all of which were reviewed individually to determine the best ways to enable transparency. This approach enabled us to test the application of our developed transparency matrix in different services and use cases. Our accompanying training materials provide more detailed information on the application of the transparency matrix and the workshops conducted.

More Information

A summary of the results of applying transparency to the services in the FAIRWork project can be found in Chapter 3.8 of our D3.3, specifically:

- Chapter 3.8.6: Practical Application of Transparency in Different DAI-DSS Services

Use

Get in touch with us and we will figure out how to apply our results to your services.